Following my post on the recent debate about being overweight, my attention was drawn to a recent systematic review of the long-term effects of breastfeeding published by the World Health Organisation (WHO). The report raises some related issues, and some that are interesting in their own right.

The review suggests the long-term benefits of breast-feeding do not seem especially dramatic. This is in contrast to the attitude to breast-feeding in some, but by no means all, developed countries, where breast-feeding is strongly encouraged. This raises the question as to why it is so strongly encouraged, and why parents should feel “guilt” (as one news report puts it) if they do not breast-feed.

Two points strike me about this. First, as in the discussion about the correct message on being overweight, there may be an argument that the simple message is the right one even if it isn’t accurate. On this line of argument – which I don’t endorse – the message “breast is best” ought to be put about even if the reality is more complicated. Maybe high-quality formula made with sterilised water will not be significantly worse for a healthy baby than breast milk (goes this line of thought); however, there are many situations where breast-feeding can make the difference between life and death – where water quality is dubious, and where high-quality formula is too expensive, for example. So better to stick with “breast is best”, and let some conscientious persons endure guilt that is strictly unnecessary. Besides, there is no evidence that breast is not best – only that it does not make as much of a difference as that slogan may suggest.

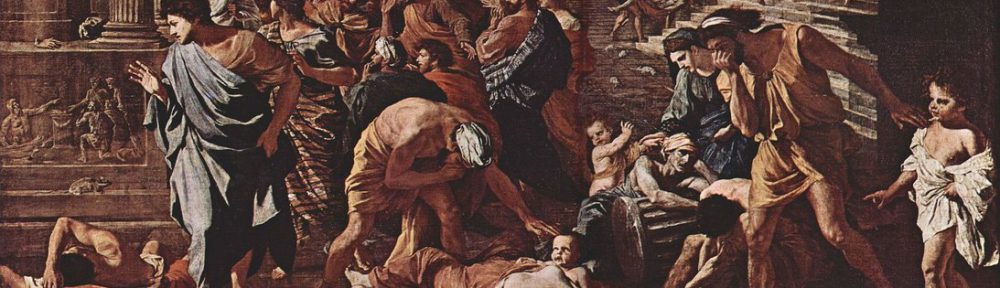

As in the case of the message on being overweight, I think this argument is not a good one. A distinction must be drawn between necessary simplification for communication purposes, which we all do, all the time; and making assertions that go beyond the evidence. Perhaps we all do that too, but we shouldn’t. The worrying possibility that this systematic review raises is that the breast-feeding drive in some countries – the UK is one I know a little about – has gone well beyond the evidence that would justify it, which presumably means it is driven by ideology or conviction more than by evidence. The persistence and dangers of medical convictions, albeit deeply and honestly held, are well-known, most famously associated with the practice of blood-letting, and most recently highlighted by the evidence-based medicine movement.

The second point occurring to me is that there is no study that can settle the question of what is best for a given child. The slogan “breast is best” is ambiguous, because “best” can be read in more than one way. It could mean nutritionally best, in which case, the study seems to confirm that the slogan is true. Or it could mean best for the child, all things considered. In many situations, the two go together, especially in low-resource settings for reasons previously mentioned. But they are not the same. In higher-resource settings, it is easy to imagine breast-feeding having considerable costs for a child, all things considered. A breast-feeding mother may be less able to pursue her career, which may affect household income; and that appears to be an important social determinant of health. The psychological effect on the mother of forsaking professional opportunities might also have an impact on the child and the family as a whole. This will obviously depend on the particular mother and on the particular family. Some mothers might be in a position to balance demands; some may not have or want jobs or careers of a sort that would be impacted. But there are at least some women who see motherhood as forcing them to make sacrifices in their professional lives. Bottle-feeding may reduce the extent of these sacrifices, making it possible for other people to look after the child during the day, and indeed the night. In a nutshell, one size doesn’t fit all, contrary to what the “breast is best” slogan suggests. It could be that bottle, not breast, is best for a particular child, when all the circumstances are considered.

The review raises this possibility because the long-term advantage of breast-feeding appears to exist, but to be small. For example, there appears to be a positive causal link between breast-feeding and IQ. But the effect is small, and as the report points out, it isn’t clear how much of an advantage a small improvement in performance on intelligence tests really is (or really indicates, perhaps). It is easy to imagine a small advantage in intelligence being outweighed by factors such as having a higher household income, or simply having a happier mother. And of course if we expand the scope of outcomes beyond the strictly health-related then the imaginative task becomes even easier.

These issues are complex. Nonetheless it seems to me that there is a difference between simplifying and distorting. Breast-feeding makes significant demands of a particular individual (the mother), which are in part justified by the health benefits for another (the child). Because these demands are so significant, and because the moral compulsion involved is so strong, it cannot be right to overstate the advantages of breast-feeding relative to nearly-as-good alternatives, where these are available. Nor can it be right to ignore the possibility of socially mediated health effects of breast-feeding (household income, family happiness, etc.) and focus exclusively on nutritional effects. The slogan “breast is best” may be appropriate in areas riddled with cholera and dysentery (I make no judgement about that) but I doubt that it is justified in, for example, the UK, and relevantly similar countries.